The amount of "Used" stickers on this book was a little much. Otherwise it is an amazing read with the author trying his best not to show his own opinions too often. In all honesty I prefer reading this book to listening to my professors' lectures any day. This book also had a few annotations, but they were in pencil so nothing serious! In conclusion, amazing book, was just sent quite an aged copy.

Sunday, September 28, 2014

Two star

It's not good to get only two stars out of five, but this isn't really such a bad review after all:

Good reason

This post was originally going to be called "Kids these days," so be warned that what follows is likely to be cranky nonsense. I'm writing in response to a couple of things. One is a student in my poverty course (we are discussing distributive justice at the moment) who said, as if to save us all a lot of wasted effort, that the thing about this stuff is that it's all normative. He seemed to mean that because it is normative there is no point discussing or thinking about it. De gustibus non disputandum, and of course the normative is all a matter of gustibus. I say 'seemed' because he was unable to say what 'normative' meant. It was almost as if he had been taught that 'normative' means impossible (or at best pointless) to think or talk about. A colleague at another school who also teaches a course on poverty tells me that this is the biggest obstacle he faces. Students just don't accept that, or see how, one can reason about questions of justice, right and wrong, etc.

The other thing that has set me off is a visit to the theatre on Monday night. The performance was not the greatest I have ever seen, and some people in the audience got restless. One person near me laughed at all the most highly dramatic points, which was understandable but distracting. Perhaps she couldn't help it, or didn't realize that the play was not a comedy. The two people behind me talked throughout the second half of the play, seeming to think that it was OK as long as they whispered and so did not drown out the voices of the actors. This too was distracting, and the last thing you need when trying harder than usual to suspend disbelief. It was annoying, but it also struck me as stupid. Not just thoughtless, but betraying a kind of blindness to the reality of other people. No doubt they had not thought through what it would be like to try to enjoy a performance while people around you were whispering constantly. But also, and one reason for this thoughtlessness, they appeared not to have much sense of other people as subjects, as beings both sentient and mattering.

Sentience and mattering seem to me to go together. Not because the sentient can feel pain. But, roughly speaking, because sentience, consciousness, is a miracle. Failure to appreciate this is a kind of mental dullness. I ought to say more about this, though, which is not easy to do. One aspect of what I mean is related to the fact that anyone who appreciates a great work of art will care about its treatment. This, I take it, is analytic. And it's not that people are great works of art, but we are like great works of art. What a piece of work is a man! and all that. The moral importance of human beings is something like self-evident.

Another part of my idea is that we are people. Whatever other people are is what we are (so far as we are people). Tat tvam asi. That you are in that seat and I am in mine, or any of the other individuating differences between us, is obviously irrelevant at some, important, level. Moral equality is self-evident too, in other words.

So bad behavior is a kind of stupid behavior. And the normative is something we can reason about, however difficult it might be to do so. We just need the right sense of reason, one that has more to do with reasonableness than with means-end reasoning. It's a sense of reason that includes the ability to empathize and to see (but not exaggerate) the value of civility. (This point seems relevant to, but is not intended to be about, a bunch of debates going on elsewhere on philosophy blogs.)

Thursday, September 25, 2014

Declining decline?

While the philosophy blogosphere collapses, or at least undergoes some kind of spasm, I wonder whether this is happening because of a general sense that we are in the last days of the philosophy profession (or what Brian Leiter called "the so-called philosophy 'profession' (it's mostly a circus"--he seems to have removed the post, but if you search for "philosophy profession circus" you can find the fragment I have quoted here).

With that as background I was just sent an article designed to help college instructors whose students are not doing the assigned reading. It contains fourteen handy tips, the first three of which are as follows:

Tip 1: Not every course is served by requiring a textbook

Consider not having a required textbook...

Of course textbooks are not always the best books or readings to assign. But this tip does not mention assigned readings other than textbooks. Recommended readings are suggested instead, at least for some courses. Guess how many students read merely recommended material?

Tip 2: “Less is more” applies to course reading

A triaged reading list should contain fewer, carefully chosen selections, thereby reducing student perception of a Herculean workload...

It's all about perception after all.

Tip 3: Aim reading material at “marginally-skilled” students

Assess reading material to determine the level of reading skill students need in order to read the text in a manner and for the ends that the instructor has intended. A text included in the course readings primarily for entertainment purposes, for example, will require a less-strong set of student reading skills than will a text included for content purposes.

So entertainment before content.

I'm not saying it's the end of the world, but if anyone ever wonders whether or how dumbing down occurs then this provides a pretty good example.

Tuesday, September 16, 2014

Metacognition

Colleen Flaherty at Inside Higher Ed writes:

Metacognition is the point at which students begin to think not just about facts and ideas, but about how they think about those facts and ideas.

What if they think about facts and ideas badly, and become aware of this? Does that count as metacognition? I suspect not. I don't think Flaherty (and other people who talk this way) really means what she says. She goes on:

Metacognition has always underpinned a liberal arts education, but just how to teach it has proved elusive. Hence the cottage industry around critical thinking – even in an era when employers and politicians are calling for more skills-based training, competency and “outcomes.”

Thinking about thinking, as metacognition is defined elsewhere in the article, has surely not underpinned a traditional liberal arts education. I think that what she means is something like what Dewey calls reflection, and that is basically being rational, or thinking for oneself, rather than swallowing beliefs uncritically. It means, in a nutshell, believing what there is good reason to believe, and knowing what the reasons are (and, perhaps, why they are good). It's the kind of thing that you learn by writing essays rather than taking short answer tests, by being grilled in class rather than lectured at, and by being held to (more or less) objective standards rather than being encouraged to think that every opinion is equally valid. In other words, if critical thinking skills are less than they used to be (and they might not be), then I suspect this is because of large class sizes (and the labor-saving pedagogy they encourage) and perhaps also some sloppy relativism floating around in classes where students do have to write and argue.

This bit is interesting too:

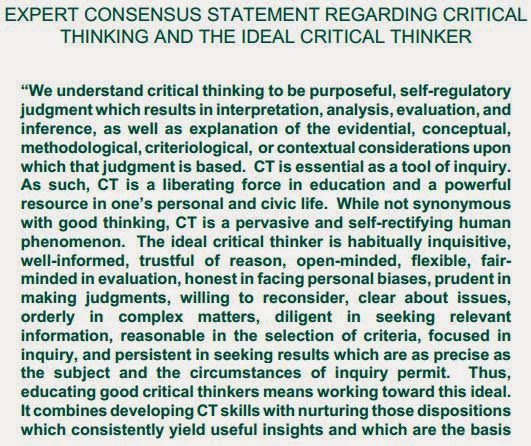

As a possible solution [to the problem of untenured professors being afraid to make their students think critically], Sheffield and his colleagues from the summer institute are hoping to talk with administrators about a way to offer some “immunity or amnesty” for professors who are taking chances to make their curriculums more rigorous, but who fear negative student reactions.

Over at the Daily Nous dmf points out that we lack a definition of critical thinking, to which Matt Drabek responds that Sheffield appears at least to be familiar with Peter Facione's work on the subject. The essay by Facione that Flaherty links to seems to be aimed at a non-scholarly audience and deliberately avoids defining 'critical thinking', but ends with this as something like a working definition of the term:

(It really does end like this, I'm not just snipping badly.)

The idea seems to be intellectual virtue. But can such virtue be taught? I would think that "CT skills" can be taught, although not in big lecture classes with multiple-choice tests. And quite possibly not by people worried about being popular with their students. Nurturing the relevant dispositions is another matter. That seems like something that might have to be done outside as well as inside the classroom. It's a matter of socialization. And a kind of socialization that goes against the grain of much contemporary culture.

Saturday, September 13, 2014

Three days in Mexico City

I lost five pounds over ten days in Mexico, and I didn't get ill. I consumed less than I do at home, but I think most of the weight loss came from walking. Certainly I ached more than normal. So the following itinerary might not be for everyone, but it can be done. I was in the state of Veracruz for a week before I went to Mexico City, but that's somewhat off the tourist trail (although if you have a way to get around it is a fantastic place to visit) so I'll leave that part of the trip out.

Sunday: My hotel was conveniently close to the Paseo de la Reforma, a two-mile boulevard dotted with statues and very friendly to cyclists, so I walked there and then up to the cathedral and other buildings on the Zocalo (central square). It's non-stop mass on Sundays but you can go inside and look around without joining in. Outside there are people selling things and native people dancing for tourists. Then on to the Templo Mayor, and then the Diego Rivera murals at the National Palace. Chocolate and churros for lunch before heading all the way down the Paseo de la Reforma to visit the anthropological museum in Chapultepec Park. Drink 1.5 litres of hibiscus-flavored water (not as good as it sounds, but how could it be?) on the way back to the hotel. Dinner at the surprisingly not bad chain restaurant Vips.

Monday: Almost all museums are closed in Mexico City on Mondays, so it's a good day to visit Teotihuacan. I decided to book a tour there, though, and it didn't go until Tuesday, so I headed by metro to the Coyoacan area, where my guidebook describes a walk, which I did. Walked past the closed Frida Kahlo and Leon Trotsky houses, and should have had lunch in this area but instead kept walking until I reached a metro station. Headed up to the Plaza de las Tres Culturas, which involved a bit of a walk through a not very prosperous neighborhood but no one mugged me and the threatened rain stayed away. The Aztec ruins here turned out to be open (and free), which was a nice surprise. Very similar to the Templo Mayor, but without the museum. The church was closed, but for all I know it always is. Then on by metro to the Basilica of Our Lady of Guadalupe, where there was, oddly, an unsuccessful snake charmer (outside the metro, not in the church), and another mass going on. Moving walkways behind and below the priest take you past the miraculous image of the Virgin Mary. Back to Vips for lunch at dinner time, then dinner almost immediately after at a place called Hellfish.

Tuesday: Bus trip to Teotihuacan (should have gone by public transport and walked to the murals at Tepantitla). Arrived not long after the place opened, but even later in the day it was not very crowded. Spent the morning looking around and climbing pyramids, then off to taste tequila (followed by too much time in a gift shop) and eat lunch. Back in the city I visited the murals in the Palacio de Bellas Artes and had dinner at the old world-seeming Restaurante Danubio, whose walls are covered with framed cartoons and messages from, presumably, happy customers.

Tuesday, September 9, 2014

Differently abled?

So, this post of Jon Cogburn's about ableism. (See also here.) I wanted to try to sort the wheat from the chaff in both the post and the comments without spending all day doing so, but in an hour I didn't manage to get far at all into the whole thing. Instead of a point-by-point commentary, then, here's more of a summary with comment.

I take his key claim to be this:

all else being equal, it is better to be able. Speaking in ways that presuppose this is not bad, at least not bad merely in virtue of the presupposition

That it is better to be able than disabled is surely close to being analytic, although of course one might disagree about its always being better to be "able" than "disabled." (That is, so-called abilities might not be so great and so-called disabilities might not be so bad, but actual disabilities can hardly fail to be at least somewhat bad.) Perhaps disability has spiritual advantages over ability (I don't think it does, but someone might make that claim) but in ordinary, worldly terms disabilities are bad. Hence the prefix 'dis'.

Cogburn makes two claims here. Not only that it is better to be able but also that it is OK to speak in ways that presuppose this. The English language comes very close to presupposing this, so Cogburn is more or less defending the language that even anti-ableist-language people speak. There is language and there is language, of course, as in English, on the one hand, and the language of exclusion, say, on the other. But the idea that it is undesirable to be blind, deaf, lame, weak, sick, insane, and so on and so on runs deep in ordinary English. Could this change? Surely it could. Should it? That is the question. Or one of the questions. Another is how bad it is to argue that speaking in such ways, ways that presuppose the badness of blindness, etc., "is not bad."

A caveat is probably necessary, or even overdue, at this point. Cogburn has been accused, among other things, of defending hate speech, so I should address this thought. He is not defending attacks on disabled people. He is not attacking disabled people. He is defending some linguistic expressions of the idea that all else being equal it is better to be able. These expressions might harm disabled people (by perpetuating harmful prejudices) or fail to benefit them as much as some alternative expressions would (by countering those prejudices), but Cogburn's claim is that no use of language should be condemned simply on the grounds that it involves the presupposition that disabilities are generally bad things to have.

Roughly his claim is that the presupposition is true, and therefore ought to be allowed, and that it is patronizing to disabled people to think that they need protection from words that are only imagined to be hurtful to their feelings. The claim against him (again roughly, and there are multiple claims against him) is that some speech directed at disabled people really is hurtful, even when it's intended to be sympathetic, and that the kind of speech in question creates an environment, a kind of society, that is detrimental to the interests of disabled people whether they feel it or not, and whether it is intended or not. It is this that is the more interesting claim, I think, because Cogburn agrees that disabled people should not be insulted or patronized.

As I see it, two questions arise here. Is it lying to say that it is not better to be able (other things being equal)? And if so, is this a noble lie?

The idea that it is a noble lie would depend on a form of utilitarianism combined with faith in the possibility of linguistic engineering. There is something obviously Orwellian about this idea, but something seemingly naive too. If we start calling blind people visually impaired instead how much will change? I have no objection at all to making such changes if the people they are intended to help like them. Presumably Cogburn doesn't object to this kind of change either. But if all we do is to change the vocabulary we use without changing the grammar then sooner or later 'visually impaired' will be used exactly the same way that 'blind' is now used, and will have exactly the same meaning, connotations, etc. These superficial linguistic changes, i.e. changes in vocabulary or diction only, will not effect deep grammatical change (by what mechanism would they do so?). Superficial changes can have deep effects, as when disfiguring someone's face leads to people treating them much worse, but it isn't obvious that changing labels will have good effects. Nor will they change anyone's ability to see.

Which brings us to the question whether that matters. Is it bad to be blind, or worse to be blind than to be sighted? In "Practical Inference" Anscombe writes:

Aristotle, we may say, assumes a preference for health and the wholesome, for life, for doing what one should do or needs to do as a certain kind of being. Very arbitrary of him.

Ignoring her irony, is it arbitrary? Is it bad or simply different to have 31 or 33 teeth rather than the standard 32? Are two legs better than one? One comment at New APPS says that it is not disability but suffering that is bad. Is that what we should say?

I don't think I would bother trying to do anything about a disability that did not lead to suffering, that much is true. But some conditions surely lead to suffering more often than not. Some of this will be fairly direct. My father's muscular dystrophy leads to his falling down from time to time. This hurts. And some of the suffering is less direct, involving other people's attitudes and reactions. Falling in public is embarrassing, but would not be if people were better. So should we fix the physical and mental problems (i.e. conditions that lead to suffering) that people have when we can or should we fix other people, the ones who regard or treat the suffering badly? Surely so far as we can, other things being equal, we should do both. And medical advances are more dependable than moral ones.

We might argue at great length about what is and is not a disability, but that some people are more prone to suffering than most because of the condition of their bodies or minds is surely beyond doubt. Pretending to deny this isn't going to help anybody.

I feel as though I've been rushing things towards the end of this post, but I also don't want to keep writing and writing without ever posting the results. One thing that encourages me to keep writing is the desire to be careful to avoid both genuine offensiveness (bad thinking) and causing offense by writing ambiguously or misleadingly (bad writing). But then I think that what I'm saying, or at least what I mean to say, is just so obviously right that no one could possibly disagree. What happened at New APPS shows that this is false. It also shows that this is very much a live (as in 'live explosive') issue, and one that brings questions about consequentialism, relativism, Aristotelian naturalism, and ordinary language together in ways that can be very personal and political. I don't mean that it's Anscombe versus the remaining New APPS people, as if one had to pick one of those two sides, but it would be interesting to see a debate like that.

Friday, September 5, 2014

Poor fellow

I was surprised by the reaction to Robin Williams' suicide, which saw some people reacting as if to the death of a personal friend and others as if to the death of the very personification of humor. I didn't feel that way at all. Then people started saying he was killed by depression and that we all need to know and understand more about this disease. This bothers me, although I'm not sure I can put my finger on why. Partly it's that if someone is ill then the solution might seem to be technical and so we don't have to worry about treating them like a human being. Or rather, I suppose, we don't have to worry about treating their depression as an emotion. We don't need to cheer the depressed person up or attempt empathy. In fact it would be a mistake to do so, a symptom of blameworthy ignorance. So we just shove them towards a doctor and wait till they are fixed before relating to them as normal again. This strikes me as an uncaring form of 'concern', although I've seen it come from people who clearly do care as well as those who I can't believe really do. It is what we are taught to think.

One thing that pushes us this way is the desire to deny that people who kill themselves are being selfish or cowardly. It wasn't a choice, the pain made him do it, people say. But of course suicide is a choice. I think it's bizarre, at least in cases like Williams', to call it selfish or cowardly. How much unhappiness are people supposed to put up with? How much was he living with? More than he could take, obviously. But it's insulting to deny that he acted of his own free will. Why can't the decision to commit suicide be accepted and respected? I don't mean by people who really knew him--far be it from me to tell them what they can or should accept--but by strangers and long-distance fans.

Because it's so horrible, I suppose. And that's why it's considered selfish. You are supposed to keep the horribleness inside you, quarantining it until it can be disposed of by a doctor, or talk it out therapeutically. Not put it in the world. Don't bleed on the mat, as they used to say unsympathetically at the judo class my brother and sister went to. But that is selfish. If you have to bleed, bleed. People often say that we should not bottle up our emotions, that men should not be afraid to cry, and so on. This, I think, is partly right and partly based on a mistaken idea about the effects of expressing painful emotions, namely that once they are expressed they will be gone. But this is not true. They don't go away once let out of the bottle. It's partly also, though, a kind of hypocrisy. We don't actually want to see people's emotions, not the really bad ones. No doubt we do want to see some emotions, including painful ones, and quite possibly more than are often on display, but there is a reason why we don't wear our hearts on our sleeves.

It's hard to think well about suicide. Here's Chesterton:

Under the lengthening shadow of Ibsen, an argument arose whether it was not a very nice thing to murder one's self. Grave moderns told us that we must not even say "poor fellow," of a man who had blown his brains out, since he was an enviable person, and had only blown them out because of their exceptional excellence. Mr. William Archer even suggested that in the golden age there would be penny-in-the-slot machines, by which a man could kill himself for a penny. In all this I found myself utterly hostile to many who called themselves liberal and humane. Not only is suicide a sin, it is the sin. It is the ultimate and absolute evil, the refusal to take an interest in existence; the refusal to take the oath of loyalty to life.

I think he's right that there is something monstrous about suicide, and there is something really nightmarish about Archer's idea. But what I want to do is to say "poor fellow." Not to praise nor to blame. And not to regard suicides as anything other than fellows, with as much free will as the rest of us. And along with that sympathy to feel some relief that the person's suffering is over.

Monday, September 1, 2014

Understanding human behavior

A question that came up prominently during the seminar in Mexico has been discussed recently also by Jon Cogburn at NewAPPS. The question is about what is involved or required for understanding human behavior.

Jon Cogburn says that:

One could say that given a set of discourse relevant norms held fixed, understanding in general just is the ability to make novel predictions. For Davidson/Dennett, we assume that human systems are largely rational according to belief/desire psychology and then this puts us in a position to make predictions about them. We make different normative assumptions about functional organization of organs, and different ones again about atoms. But once those are in place, understanding is just a matter or being better able to predict.

I'm writing this as a post of my own rather than as a comment at NewAPPS partly because I suspect it will be too long for a comment and partly because I don't know what all of this means and don't want to appear snarky or embarrassingly ignorant. I genuinely (non-snarkily) don't know what it means to hold fixed a set of discourse relevant norms, nor what it means to put normative assumptions in place. But what I take Cogburn to be saying is, in effect, that "understanding in general just is the ability to make novel predictions."

Winch says that being able to predict what people are going to do does not mean that we really understand them or their activity. He cites Wittgenstein's wood-sellers, who buy and sell wood according to the area covered without regard to the height of each pile. We can describe their activity and perhaps predict their behavior but we don't, according to Winch, really understand it. He surely has a point. Here's a longish quote (from pp. 114-115 of the linked edition, pp. 107-108 of my copy):

Some of Wittgenstein’s procedures in his philosophical elucidations reinforce this point. He is prone to draw our attention to certain features of our own concepts by comparing them with those of an imaginary society, in which our own familiar ways of thinking are subtly distorted. For instance, he asks us to suppose that such a society sold wood in the following way: They ‘piled the timber in heaps of arbitrary, varying height and then sold it at a price proportionate to the area covered by the piles. And what if they even justified this with the words: “Of course, if you buy more timber, you must pay more”?’ (38: Chapter I, p. 142–151.) The important question for us is: in what circumstances could one say that one had understood this sort of behaviour? As I have indicated, Weber often speaks as if the ultimate test were our ability to formulate statistical laws which would enable us to predict with fair accuracy what people would be likely to do in given circumstances. In line with this is his attempt to define a ‘social role’ in terms of the probability (Chance) of actions of a certain sort being performed in given circumstances. But with Wittgenstein’s example we might well be able to make predictions of great accuracy in this way and still not be able to claim any real understanding of what those people were doing.
The difference is precisely analogous to that between being able to formulate statistical laws about the likely occurrences of words in a language and being able to understand what was being said by someone who spoke the language. The latter can never be reduced to the former; a man who understands Chinese is not a man who has a firm grasp of the statistical probabilities for the occurrence of the various words in the Chinese language. Indeed, he could have that without knowing that he was dealing with a language at all; and anyway, the knowledge that he was dealing with a language is not itself something that could be formulated statistically. ‘Understanding’, in situations like this, is grasping the point or meaning of what is being done or said. This is a notion far removed from the world of statistics and causal laws: it is closer to the realm of discourse and to the internal relations that link the parts of a realm of discourse.

Winch talks elsewhere (see p. 89, e.g.) about what it takes for understanding to count as genuine understanding: reflective understanding of human activity must presuppose the participants' unreflective understanding. So the concepts that belong to the activity must be understood. Without such understanding all we will generate is (p. 88) "a rather puzzling external account of certain motions which certain people have been perceived to go through."

But does Winch get to say what counts as genuine understanding? This was a point we discussed when I was at the University of Veracruz. Several students there seemed to want less than what Winch would accept as true understanding of human behavior. They did not want to empathize. They wanted to identify patterns, and if they were able to do so well enough to be able to make accurate predictions then they would be very satisfied. A "rather puzzling external account of certain motions" is basically what they were hoping to produce, as long as it allowed them to make accurate predictions.

It looks to me as though Winch would resist or even reject such a desire, but can a desire be mistaken? And I don't know how to settle the apparent disagreement between Winch and others about what is and is not real understanding. Can we just say that as long as you see the facts you may say what you like? Or is that too easy?

Labels: New APPS, Peter Winch, social science, Wittgenstein
