[UPDATE: thanks to dmf this post is now being discussed over at the Daily Nous. If you want to read some very interesting responses to the use of group work in philosophy classes, head over there.]

I often wonder what the point of classes is. Not just rhetorically, but actually, the idea being that if we know why we have classes then this might help us do a better job with them. (I also sometimes wonder in the rhetorical sense, because when I was an undergraduate I was actively discouraged from attending lectures and yet many classes are so large that lecturing is more or less inevitable.)

The point, it seems to me, is for students to interact with an expert on the material they are studying. They get to ask questions about assigned readings that they did not understand. They get some kind of (possibly very short) lecture on this reading so that if they only

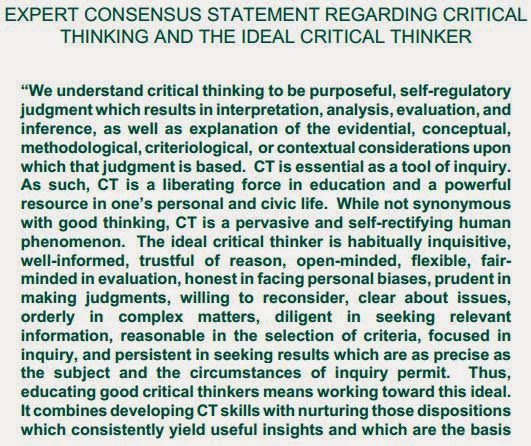

think they understood it they will be corrected. They get to ask questions about the relevant issues, and probably some kind of lecture on these issues. And hopefully some kind of discussion of the issues will either happen of its own accord in the process of all this or, more likely, will be made to happen by the teacher. The point of this discussion being to get students to think more, more carefully, and in a more informed way, than they otherwise would, about either issues that matter or issues that it is somehow useful to think about. (For instance, an issue might not matter in itself but debates about it might be historically or politically important, or it might be an issue debating which is thought to develop certain intellectual skills. Nothing turns on whether the weights in the gym are up or down, but moving them up and then down again can be very beneficial. If there is an intellectual equivalent (a very big if, of course) then academic work, including discussion, might well be it, or at least an ingredient of it.) Ideally this will all happen in a way that feels natural to the students, so that it connects as seamlessly as possible with the rest of their lives. Then discussing ideas, asking questions, reading, and otherwise exploring the world intellectually can become greater parts of their lives.

I think its apparent unnaturalness is why group-work feels so wrong to me. As far as I can tell, though, it's becoming the norm. See comments here and here, for instance, and the reference to "structured activity" here. (And while you're at it, see this comment for some of the problems with group-work.) I'm also going by what I've seen other teachers do--increasingly it seems to involve group-work and student presentations. So, why do I think this is so bad?

The first thing to say is there is a real problem of ambiguity and possible misunderstanding here. Not all lectures, or things that people call lectures, are the same, and not all group-work or structured in-class activities are the same either. The second thing to say is that I'm not defending lectures. I think they are largely a waste of time. When I was an undergraduate we were told not to go to lectures on the grounds that you can learn more, and more efficiently, by reading. Lectures were presented as a remedial option. I think they can be useful in this way, and when my students just seem lost I do resort to lecturing. But I see it as a sign that some failure has occurred, not as a go-to option. Enough about me though. On to complaining about other people.

Here are problems with group-work that I have observed or heard about multiple times from students:

- the members of the group (unless the group is the whole class) do not include an expert on either the topic for discussion or the assigned reading on it, so mistakes can go uncorrected and misunderstanding can be increased (if plausibly, confidently, or charismatically defended)

- there can be a tendency for everyone in a group to want to get along and agree, so that diversity of opinion (which is sometimes healthy and at least indicative of independent thought) can be replaced by a kind of groupthink, in which the better (or better-supported) ideas by no means always win out

- neither every student nor even every group engages in the exercise seriously or at all (policing can help here, of course, but is not likely to be 100% effective, and brings its own problems simply by making the teacher take on the role of police officer)

- groups can be dominated by loudmouths (although they might also be more comfortable environments for some students to speak in)

- the whole thing can feel like a waste of time

The first of these problems is probably less serious at more selective places. If everyone in the group has a decent grasp of the issues, ideas, facts, etc. then the wisdom of the crowd might drive out individual kinks of ignorance and misunderstanding. But if enough students have not done the reading, or not done it carefully, or done it but without sufficient comprehension, then trouble lies ahead.

The problem of the whole thing feeling like a waste of time could be addressed by explaining why it isn't, but this would require being able to do that. It might be enough to say, "Trust me, the discussion will be much better afterwards." But why should students trust the person who says this? If they are an expert on philosophy, what do they know about educational psychology? And, in fact, what proof is there that discussion is valuable, let alone group-work intended to improve discussion? I think discussion is part of the examined life, but there's no evidence to support that claim. There might be evidence that it helps with remembering facts, but if it does, so what? Memorizing facts is not what the liberal arts claim to be about. It certainly isn't what philosophy is about, anyway.

The biggest problem, though, has to do with the suggestion made here that such activities feel forced and unnatural. They are, after all, forced and unnatural. They involve the teacher's going from being a resident expert there to help students in his/her area of expertise to being a classroom manager, manipulating students for their own good. Class is no longer (if it ever was) a place where a conversation takes place between people who (at least might) care about ideas and books. It is now a place where learning is facilitated. Of course the change is not from black to white, but students seem a bit more patronized in the new way of doing things, and the ideas (literature, arguments, whatever) being taught seem a bit more remote from life, a bit less like things that anyone might actually care about when off duty. It seems a shame to me.

Having said all that, I am a strong believer in doing what works, and I think that if we're qualified to judge work in our areas, as we (professional teachers) surely are, then we can also judge when a discussion is going well or not, and whether it is going better or worse than past discussions. So if a little bit of group-work really does improve discussion then I'm all for it. But there is a downside that should not be completely ignored. And I don't think that group-work should be done just because it's the latest thing or because it helps fill up the time we are required to spend in class (as I suspect is sometimes the case).

No doubt a thousand grumpy old men have said much the same thing before. What I hope might be new is the ethical angle. Patronizing and manipulating people should be avoided as much as possible. And there is a great evil in the world that might be called 'management' (or 'bureaucracy' or 'assessment' or whatever you like to call it), replacing freedom, individuality, and spontaneity with various systems of control. There is, it seems to me, a real danger that classroom management might be part of this problem.
